Publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2026

- EACL ’26

Rethinking Creativity Evaluation: A Critical Analysis of Existing Creativity Evaluations

Li-Chun Lu, Miri Liu, Pin-Chun Lu, Yufei Tian, Shao-Hua Sun, and Nanyun Peng

To appear in Proceedings of the European Chapter of the Association for Computational Linguistics (EACL), 2026

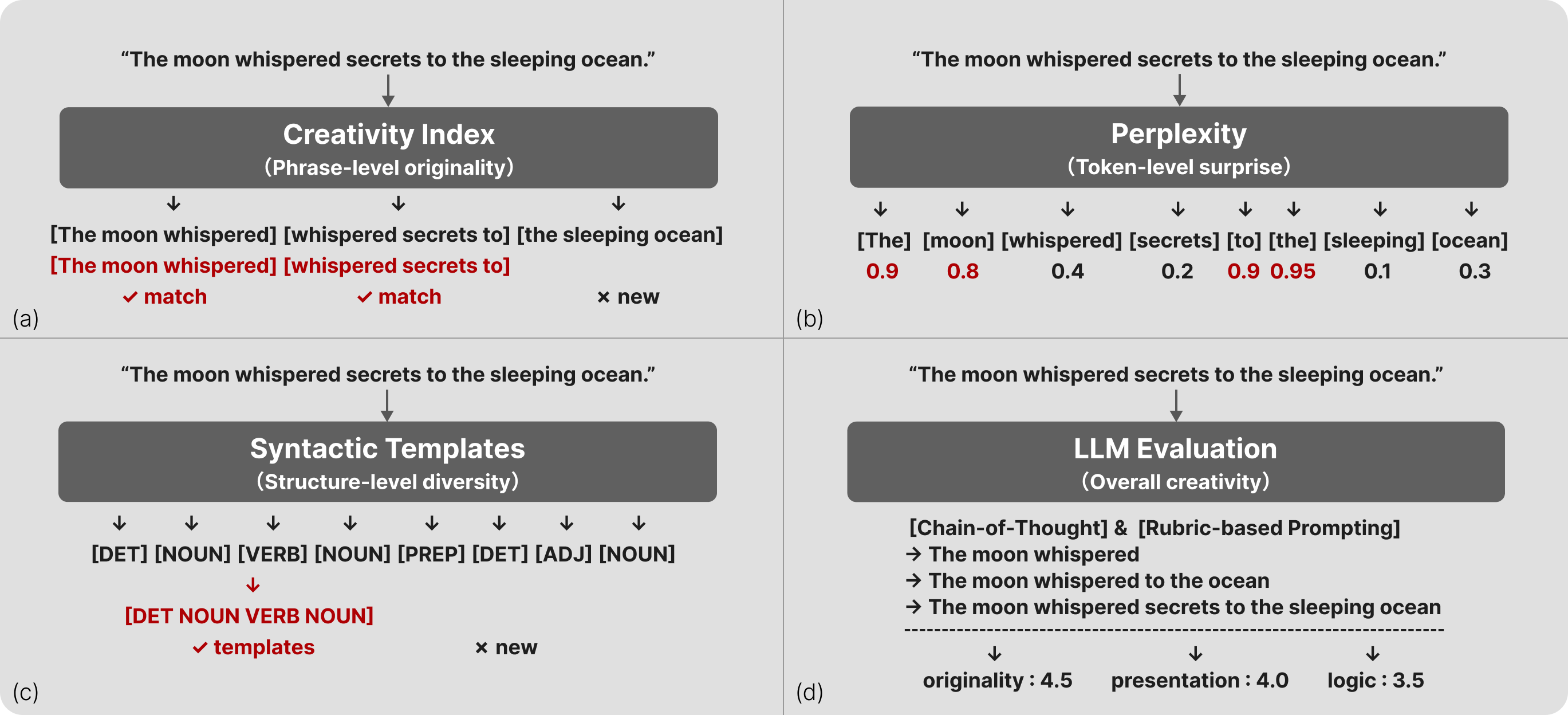

We systematically examine, analyze, and compare representative creativity measures (creativity index, perplexity, syntactic templates, and LLM-as-a-Judge) across diverse creative domains, including creative writing, unconventional problem-solving, and research ideation. Our analyses reveal that these metrics exhibit limited consistency, capturing different dimensions of creativity. We highlight key limitations, including the creativity index’s focus on lexical diversity, perplexity’s sensitivity to model confidence, and syntactic templates’ inability to capture conceptual creativity. Additionally, LLM-as-a-Judge shows instability and bias. Our findings underscore the need for more robust, generalizable evaluation frameworks that better align with human judgments of creativity.

@article{lu2025rethinking,
  title = {Rethinking Creativity Evaluation: A Critical Analysis of Existing Creativity Evaluations},
  author = {Lu, Li-Chun and Liu, Miri and Lu, Pin-Chun and Tian, Yufei and Sun, Shao-Hua and Peng, Nanyun},
  journal = {To appear in Proceedings of the European Chapter of the Association for Computational Linguistics (EACL)},
  year = {2026},
}

- EACL ’26

BILLY: Steering Large Language Models via Merging Persona Vectors for Creative Generation

Tsung-Min Pai, Jui-I Wang, Li-Chun Lu, Shao-Hua Sun, Hung-Yi Lee, and Kai-Wei Chang

To appear in Proceedings of the European Chapter of the Association for Computational Linguistics (EACL), 2026

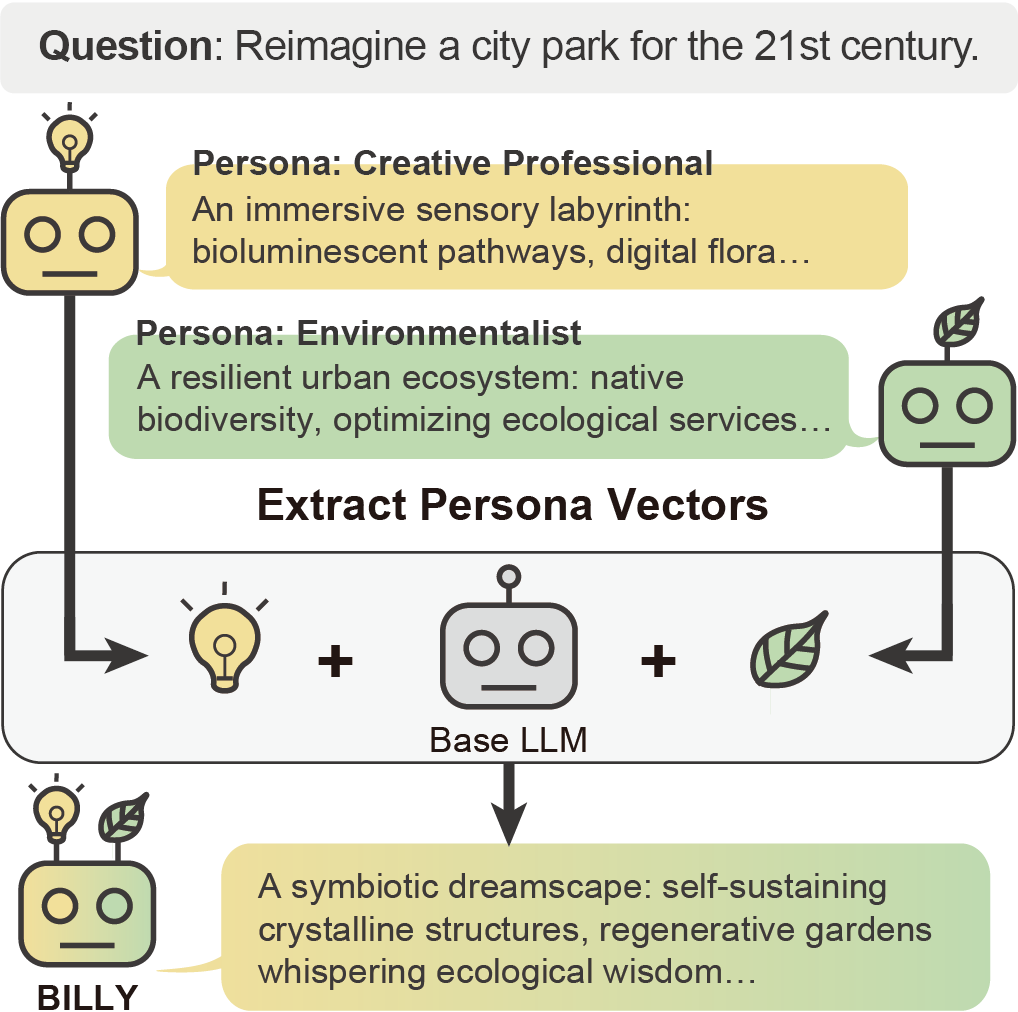

Multi-LLM systems enhance the creativity of large language models by simulating human collective intelligence but suffer from significant drawbacks, such as high computational costs and inference latency. To address these limitations, we propose BILLY (BlendIng persona vectors for Large Language model creativitY), a training-free framework that captures the benefits of multi-LLM collaboration, i.e., inducing diverse perspectives and specialized expertise, within a single model. BILLY operates by extracting and blending multiple distinct persona vectors directly in the model’s activation space. We steer the model’s generation process with this merged vector during inference, enabling multi-perspective output without explicit multi-LLM communication. Our experiments across creativity-oriented benchmarks demonstrate that BILLY surpasses single model prompting and traditional multi-LLM approaches, while substantially reducing inference time and computational costs. Our analyses further reveal that distinct persona vectors can be blended to achieve both effective control over complementary aspects of generation and greater interpretability.

@article{pai2025billy,
  title = {BILLY: Steering Large Language Models via Merging Persona Vectors for Creative Generation},
  author = {Pai, Tsung-Min and Wang, Jui-I and Lu, Li-Chun and Sun, Shao-Hua and Lee, Hung-Yi and Chang, Kai-Wei},
  journal = {To appear in Proceedings of the European Chapter of the Association for Computational Linguistics (EACL)},
  year = {2026}
}

2025

- ICLR ’25

Dynamic-SUPERB Phase-2: A Collaboratively Expanding Benchmark for Measuring the Capabilities of Spoken Language Models with 180 Tasks

Chien-yu Huang, Wei-Chih Chen, Shu-wen Yang, Andy T. Liu, Chen-An Li, Yu-Xiang Lin, Wei-Cheng Tseng, Anuj Diwan, Yi-Jen Shih, Jiatong Shi, and 68 more authors

In The Thirteenth International Conference on Learning Representations, 2025

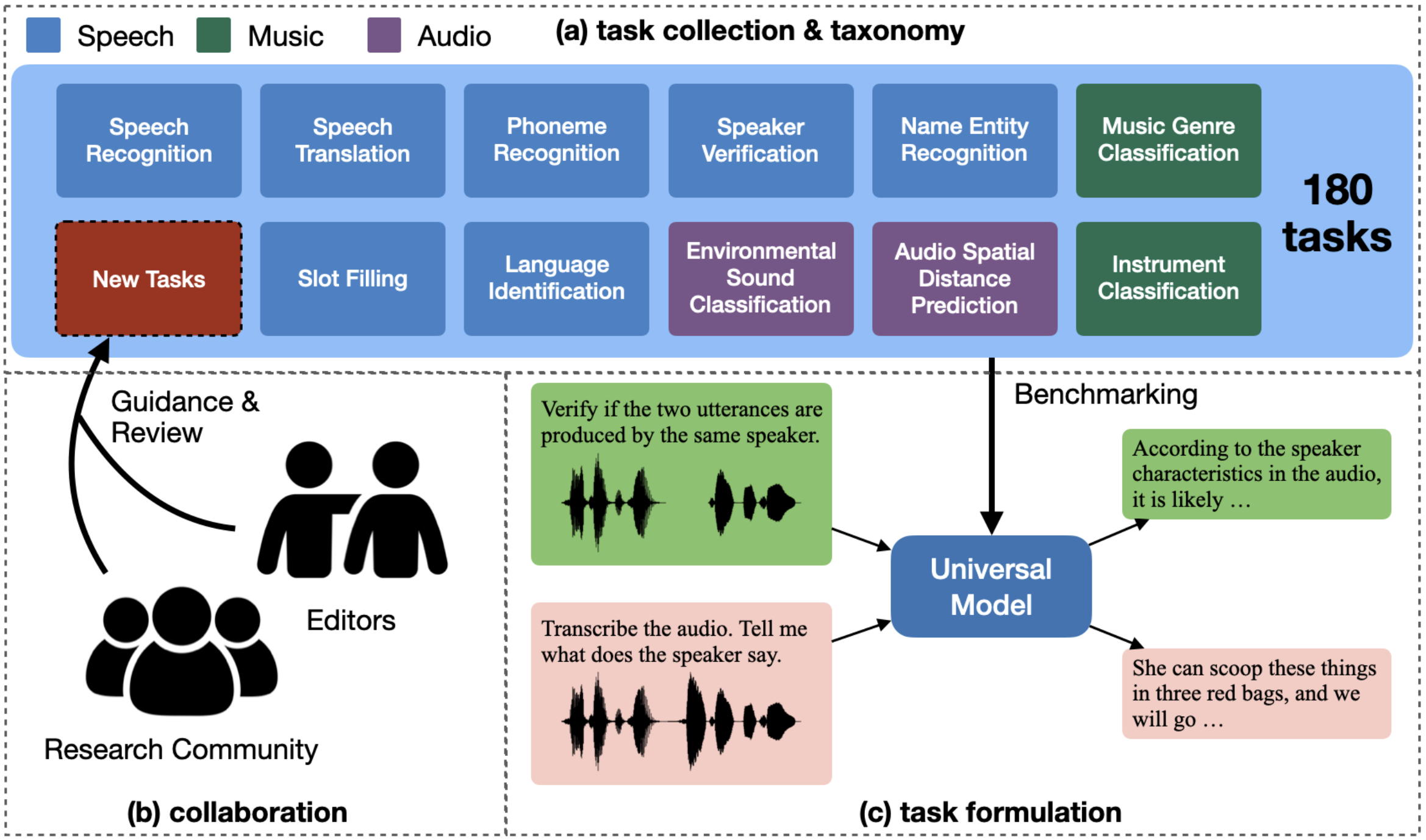

Multimodal foundation models, such as Gemini and ChatGPT, have revolutionized human-machine interactions by seamlessly integrating various forms of data. Developing a universal spoken language model that comprehends a wide range of natural language instructions is critical for bridging communication gaps and facilitating more intuitive interactions. However, the absence of a comprehensive evaluation benchmark poses a significant challenge. We present Dynamic-SUPERB Phase-2, an open and evolving benchmark for the comprehensive evaluation of instruction-based universal speech models. Building upon the first generation, this second version incorporates 125 new tasks contributed collaboratively by the global research community, expanding the benchmark to a total of 180 tasks, making it the largest benchmark for speech and audio evaluation. While the first generation of Dynamic-SUPERB was limited to classification tasks, Dynamic-SUPERB Phase-2 broadens its evaluation capabilities by introducing a wide array of novel and diverse tasks, including regression and sequence generation, across speech, music, and environmental audio. Evaluation results indicate that none of the models performed well universally. SALMONN-13B excelled in English ASR, while WavLLM demonstrated high accuracy in emotion recognition, but current models still require further innovations to handle a broader range of tasks. We will soon open-source all task data and the evaluation pipeline.

@inproceedings{huangdynamic,
  title = {Dynamic-SUPERB Phase-2: A Collaboratively Expanding Benchmark for Measuring the Capabilities of Spoken Language Models with 180 Tasks},
  author = {Huang, Chien-yu and Chen, Wei-Chih and Yang, Shu-wen and Liu, Andy T. and Li, Chen-An and Lin, Yu-Xiang and Tseng, Wei-Cheng and Diwan, Anuj and Shih, Yi-Jen and Shi, Jiatong and Chen, William and Chen, Xuanjun and Hsiao, Chi-Yuan and Peng, Puyuan and Wang, Shih-Heng and Kuan, Chun-Yi and Lu, Ke-Han and Chang, Kai-Wei and Yang, Chih-Kai and Ritter-Gutierrez, Fabian and Chuang, Ming To and Huang, Kuan-Po and Arora, Siddhant and Lin, You-Kuan and Yeo, Eunjung and Chang, Kalvin and Chien, Chung-Ming and Choi, Kwanghee and Hsieh, Cheng-Hsiu and Lin, Yi-Cheng and Yu, Chee-En and Chiu, I-Hsiang and Guimarães, Heitor R. and Han, Jionghao and Lin, Tzu-Quan and Lin, Tzu-Yuan and Chang, Homu and Chang, Ting-Wu and Chen, Chun Wei and Chen, Shou-Jen and Chen, Yu-Hua and Cheng, Hsi-Chun and Dhawan, Kunal and Fang, Jia-Lin and Fang, Shi-Xin and Chiang, Kuan-Yu Fang and Fu, Chi An and Hsiao, Hsien-Fu and Hsu, Ching Yu and Huang, Shao-Syuan and Wei, Lee Chen and Lin, Hsi-Che and Lin, Hsuan-Hao and Lin, Hsuan-Ting and Lin, Jian-Ren and Liu, Ting-Chun and Lu, Li-Chun and Pai, Tsung-Min and Pasad, Ankita and Kuan, Shih-Yun Shan and Shon, Suwon and Tang, Yuxun and Tsai, Yun-Shao and Wei, Jui-Chiang and Wei, Tzu-Chieh and Wu, Chengxi and Wu, Dien-Ruei and Yang, Chao-Han Huck and Yang, Chieh-Chi and Yip, Jia Qi and Yuan, Shao-Xiang and Noroozi, Vahid and Chen, Zhehuai and Wu, Haibin and Livescu, Karen and Harwath, David and Watanabe, Shinji and Lee, Hung-yi},
  booktitle = {The Thirteenth International Conference on Learning Representations},
  year = {2025},
  url = {https://arxiv.org/abs/2411.05361},
}

2024

- COLM ’24

LLM Discussion: Enhancing the Creativity of Large Language Models via Discussion Framework and Role-Play

Li-Chun Lu*, Shou-Jen Chen*, Tsung-Min Pai, Chan-Hung Yu, Hung-yi Lee, and Shao-Hua Sun

In First Conference on Language Modeling, 2024

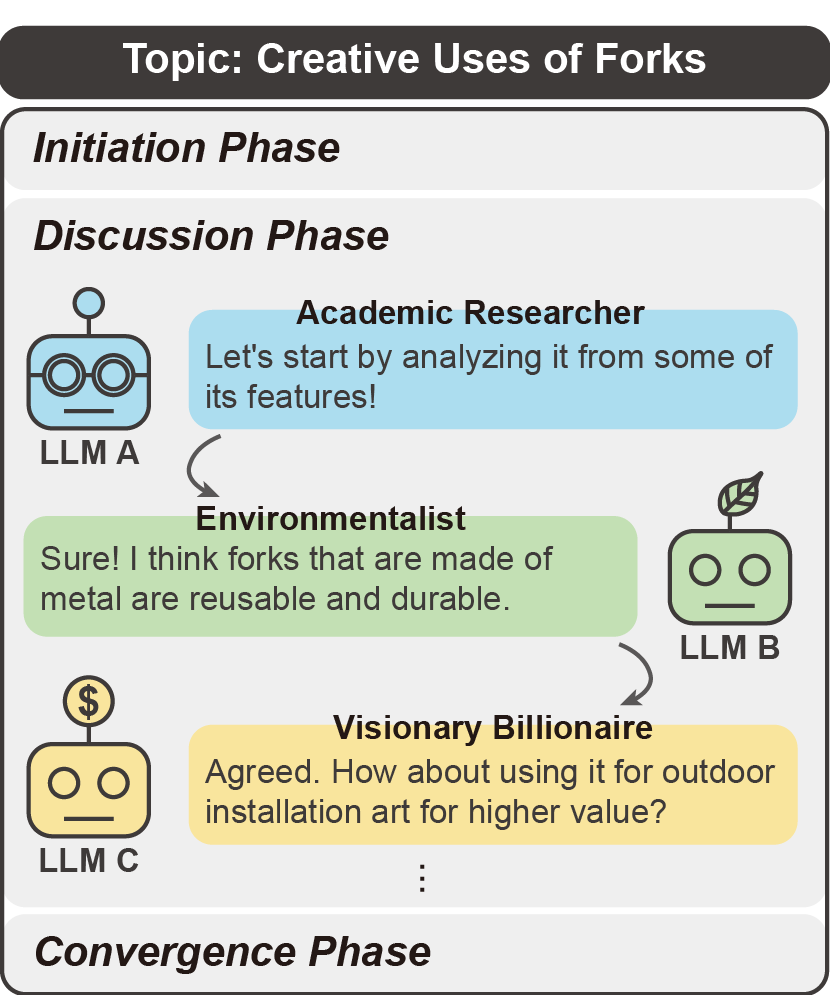

Large language models (LLMs) have shown exceptional proficiency in natural language processing but often fall short of generating creative and original responses to open-ended questions. To enhance LLM creativity, our key insight is to emulate the human process of inducing collective creativity through engaging discussions with participants from diverse backgrounds and perspectives. To this end, we propose LLM Discussion, a three-phase discussion framework that facilitates vigorous and diverging idea exchanges and ensures convergence to creative answers. Moreover, we adopt a role-playing technique by assigning distinct roles to LLMs to combat the homogeneity of LLMs. We evaluate the efficacy of the proposed framework with the Alternative Uses Test, Similarities Test, Instances Test, and Scientific Creativity Test through both LLM evaluation and human study. The results show that our proposed framework outperforms single-LLM approaches and existing multi-LLM frameworks across various creativity metrics.

@inproceedings{lullm,
  title = {LLM Discussion: Enhancing the Creativity of Large Language Models via Discussion Framework and Role-Play},
  author = {Lu, Li-Chun and Chen, Shou-Jen and Pai, Tsung-Min and Yu, Chan-Hung and Lee, Hung-yi and Sun, Shao-Hua},
  booktitle = {First Conference on Language Modeling},
  year = {2024},
  url = {https://arxiv.org/abs/2405.06373},
}

- CHI ’24

VeeR: Exploring the Feasibility of Deliberately Designing VR Motion that Diverges from Mundane, Everyday Physical Motion to Create More Entertaining VR Experiences

Pin-Chun Lu, Che-Wei Wang, Yu Lun Hsu, Alvaro Lopez, Ching-Yi Tsai, Chiao-Ju Chang, Wei Tian Mireille Tan, Li-Chun Lu, and Mike Y. Chen

In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, 2024

This paper explores the feasibility of deliberately designing VR motion that diverges from users’ physical movements to turn mundane, everyday transportation motion (e.g., metros, trains, and cars) into more entertaining VR motion experiences, in contrast to prior car-based VR approaches that synchronize VR motion to physical car movement exactly. To gain insight into users’ preferences for veering rate and veering direction for turning (left/right) and pitching (up/down) during the three phases of acceleration (accelerating, cruising, and decelerating), we conducted a formative, perceptual study (n=24) followed by a VR experience evaluation (n=18), all conducted on metro trains moving in a mundane, straight-line motion. Results showed that participants preferred relatively high veering rates, and preferred pitching upward during acceleration and downward during deceleration. Furthermore, while veering decreased comfort as expected, it significantly enhanced immersion (p<.01) and entertainment (p<.001), and the overall experience, with comfort taken into account, was preferred by 89% of participants.

@inproceedings{10.1145/3613904.3642064,
  author = {Lu, Pin-Chun and Wang, Che-Wei and Hsu, Yu Lun and Lopez, Alvaro and Tsai, Ching-Yi and Chang, Chiao-Ju and Tan, Wei Tian Mireille and Lu, Li-Chun and Chen, Mike Y.},
  title = {VeeR: Exploring the Feasibility of Deliberately Designing VR Motion that Diverges from Mundane, Everyday Physical Motion to Create More Entertaining VR Experiences},
  year = {2024},
  isbn = {9798400703300},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613904.3642064},
  doi = {10.1145/3613904.3642064},
  booktitle = {Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems},
  articleno = {424},
  numpages = {13},
}

- CHI ’24 Runner Up

AnimalSense: Understanding Beyond-human Sensory Capabilities of Animals via VR Games

Yu Lun Hsu, Chien-Ting Lu, Li-Chun Lu, Chih-Heng Tam, Yu-Chieh Sun, and Ting-Kang Wang

In Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems, 2024

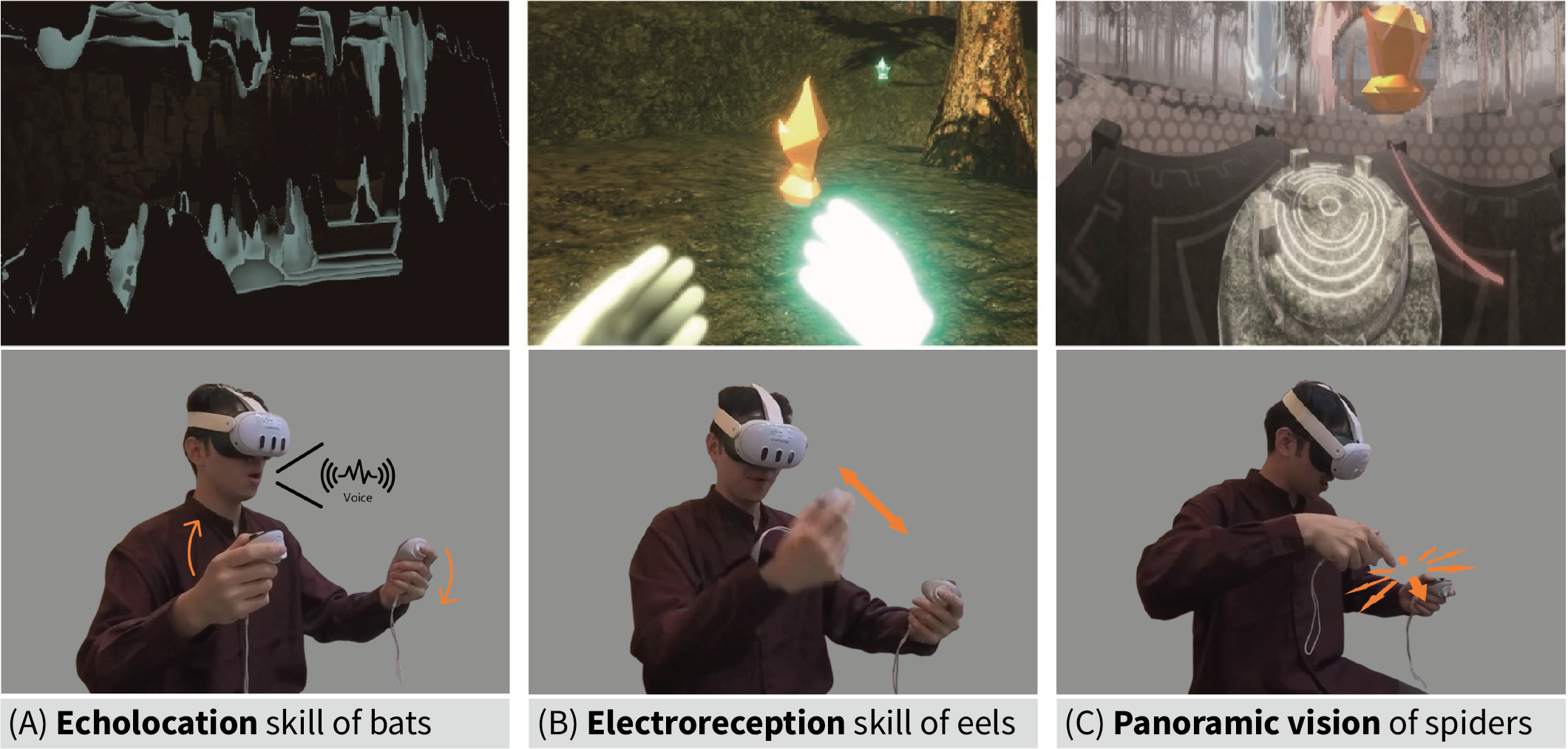

Animals have sensory capabilities far beyond those of humans, including panoramic vision and the ability to detect ultrasound, UV light, electric fields, and magnetic fields. AnimalSense is a VR game crafted to immerse players in the extraordinary sensory worlds of animals. The game’s design leverages the concepts of sensory substitution, sensory remapping, and active learning, allowing users to explore and utilize these beyond-human sensory capabilities to overcome game challenges, thereby enhancing players’ understanding of animals’ unique senses. This paper presents three such game section designs: 1) echolocation in bats, 2) electroreception in eels, and 3) panoramic vision in spiders, showcasing how these are integrated into the gameplay.

@inproceedings{10.1145/3613905.3648102,
  author = {Hsu, Yu Lun and Lu, Chien-Ting and Lu, Li-Chun and Tam, Chih-Heng and Sun, Yu-Chieh and Wang, Ting-Kang},
  title = {AnimalSense: Understanding Beyond-human Sensory Capabilities of Animals via VR Games},
  year = {2024},
  isbn = {9798400703317},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613905.3648102},
  doi = {10.1145/3613905.3648102},
  booktitle = {Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems},
  articleno = {632},
  numpages = {6},
  keywords = {Animal Comprehension, Animals, Perceptual ranges, Sensory capabilities, Virtual Reality},
}